How to Install Spark Layer Core on Windows 10

This tutorial guides you through installing Apache Spark on Windows 10. Before we begin, it helps to understand what a core layer is. Imagine you are in a library with rows of shelves full of books, plus one main pathway that everyone uses to enter, leave, and move between the shelves. That main pathway represents the core layer: everything else depends on it. Apache Spark is a powerful framework made up of multiple layers, including Spark Core, Spark SQL, Spark Streaming, GraphX, and others. Spark Core is the foundation on which the other components are built.

What is Apache Spark?

Apache Spark is an open-source framework. Development began in 2009, and it was released as open-source software in 2010. In 2013, it was donated to the Apache Software Foundation, and its popularity grew after that. Spark supports several programming languages, including Java, Python, and Scala, and it is known for processing massive amounts of data quickly.

What is Apache Spark used for?

Apache Spark is a powerful data processing engine used for machine learning, artificial intelligence, recommender systems, and data analytics. It is especially effective at handling big data, which makes it a popular choice among big data developers.

One of Spark’s main advantages is its ability to process streaming data in near real time. This lets it analyze incoming data as it arrives and deliver timely insights, keeping you up to date with the latest information.

The rest of this tutorial walks you through installing Spark on Windows 10.

Requirements

- Java

- Python

- WinUtils

- Spark

- 7-zip or WinRAR

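Note: Make sure that Java and Python are installed on your device. To verify the installations, run the commands below in Command Prompt (CMD). Each command should print a version number.

java -version
python --version

The single-dash java -version form works on every Java release, including Java 8, which does not recognize --version.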
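You can also script this check in Python. The sketch below is a minimal example that uses only the standard library. The helper name `tool_version` is hypothetical. It returns None when a command is not found on Path, which usually means the Path entry is missing.

```python
import shutil
import subprocess


def tool_version(command):
    """Run a version command and return the first line of its output.

    Returns None if the executable cannot be found on Path.
    tool_version is a hypothetical helper, not part of any library.
    """
    if shutil.which(command[0]) is None:
        return None
    result = subprocess.run(command, capture_output=True, text=True)
    # `java -version` writes to stderr; `python --version` writes to stdout.
    output = (result.stdout or result.stderr).strip()
    return output.splitlines()[0] if output else ""


if __name__ == "__main__":
    for cmd in (["java", "-version"], ["python", "--version"]):
        version = tool_version(cmd)
        print(" ".join(cmd), "->", version if version is not None else "not found on Path")
```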

Set Up Spark Layer Core on Windows 10

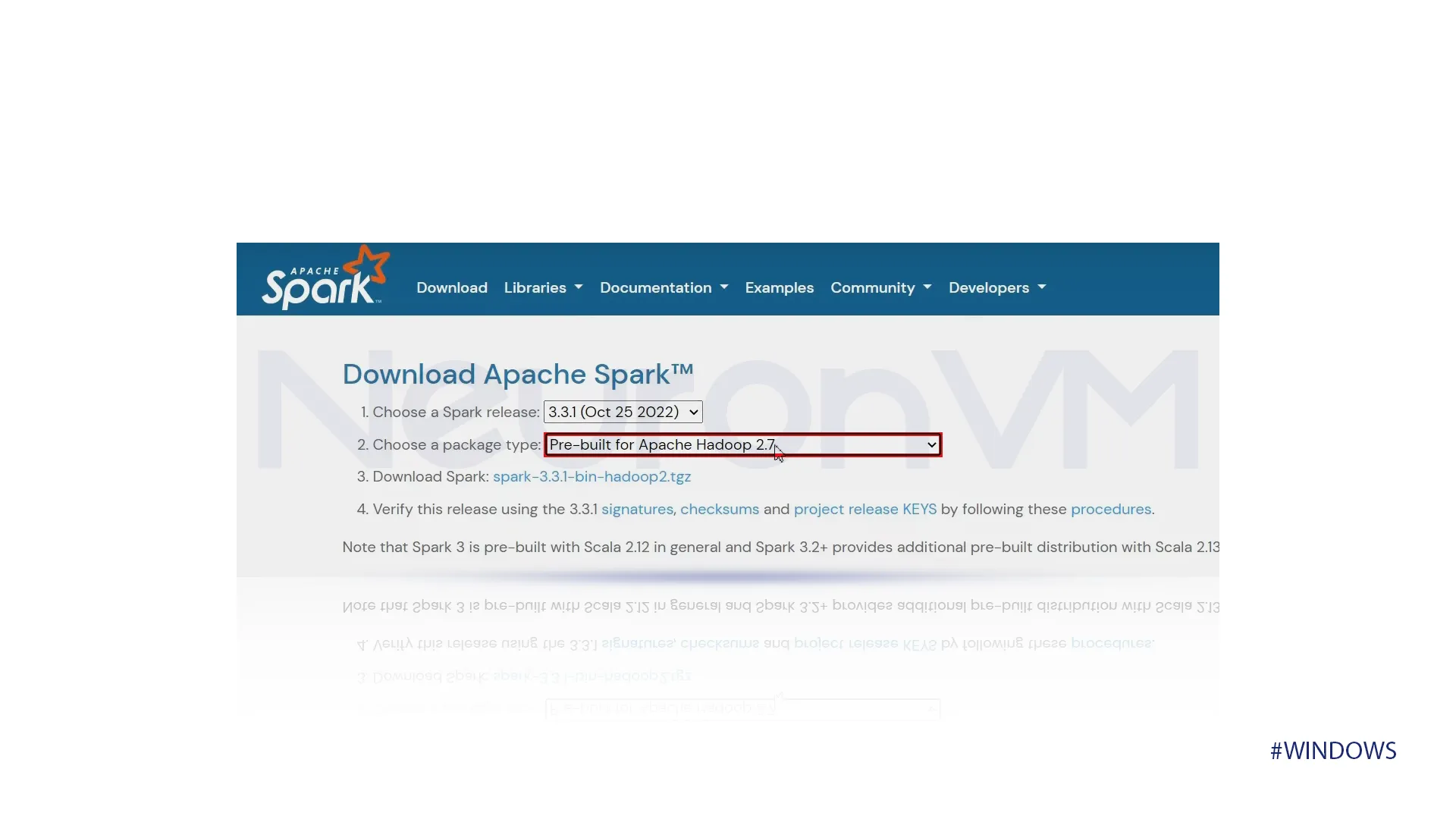

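Step 1) Go to the official Apache Spark website (spark.apache.org) and open the downloads page. Select the latest release available and, for the package type, choose a build pre-built for Apache Hadoop. Then download the Spark archive. Note the Hadoop version in the package name (for example, hadoop3), because the WinUtils build you download later should match it.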

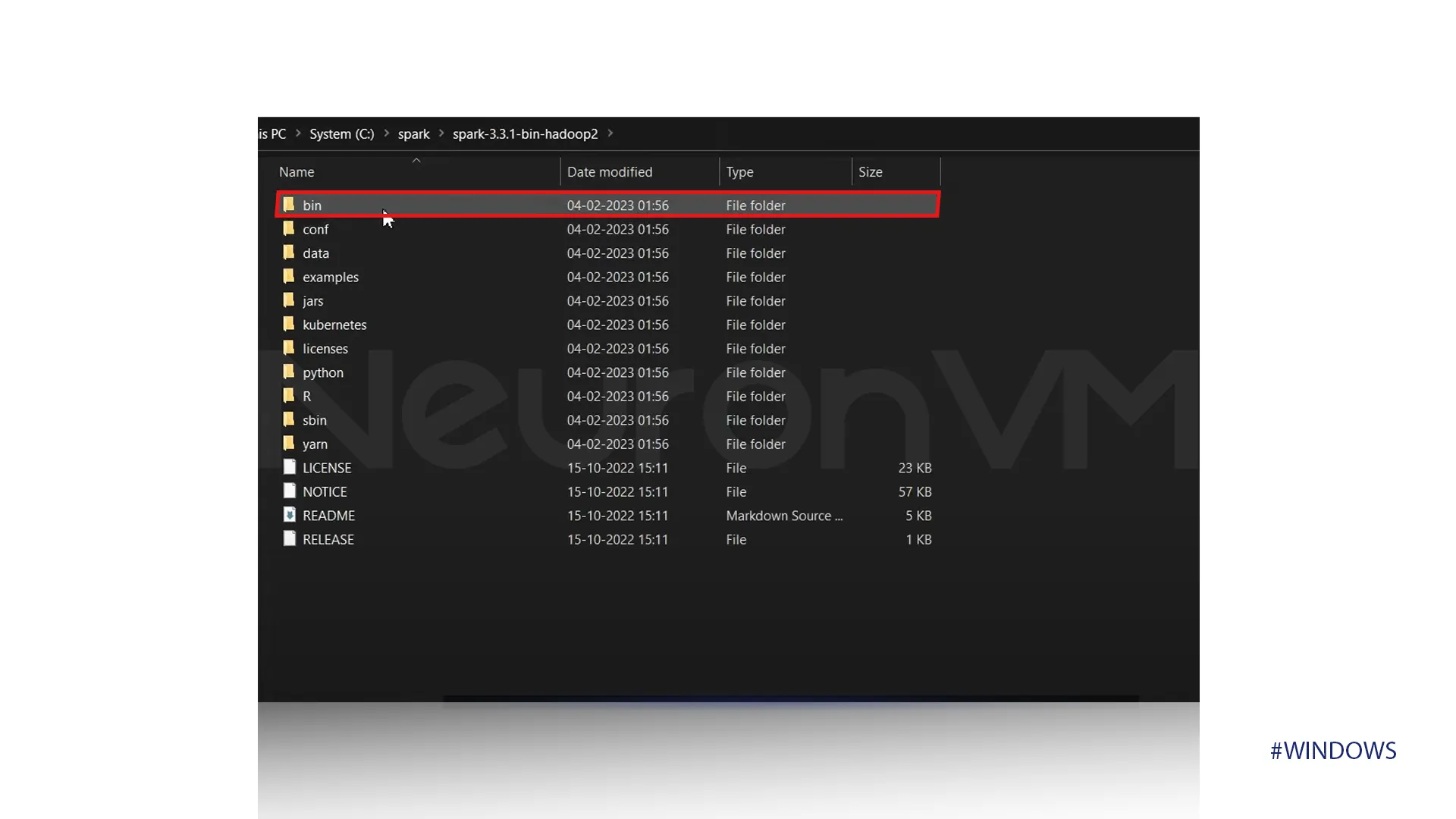

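Step 2) Once the download completes, open your Downloads folder and extract the archive with 7-Zip or WinRAR. The download is a compressed tar file (.tgz), so with 7-Zip you may need to extract twice: first the .tgz, then the .tar inside it. It is safest to move the extracted folder to a permanent location whose path contains no spaces, for example C:\spark. Inside the folder, you will find a bin directory containing Spark’s command scripts, such as spark-shell.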
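If you would rather not install 7-Zip or WinRAR, Python’s standard tarfile module can also unpack the archive. The sketch below is a minimal example. The function name `extract_spark` and the paths in the usage comment are hypothetical. It assumes the archive holds a single top-level Spark folder, which is how the pre-built packages are laid out. The folder the function returns is the one the Spark variable should point to in Step 4.

```python
import tarfile
from pathlib import Path


def extract_spark(archive, dest):
    """Unpack a Spark .tgz archive into dest and return the extracted Spark folder.

    extract_spark is a hypothetical helper for illustration.
    """
    dest = Path(dest)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        # Collect the top-level entries, e.g. {"spark-<version>-bin-hadoop3"}.
        tops = {Path(name).parts[0] for name in tar.getnames()}
        # Use the safer "data" extraction filter where this Python version supports it.
        if hasattr(tarfile, "data_filter"):
            tar.extractall(dest, filter="data")
        else:
            tar.extractall(dest)
    if len(tops) == 1:
        return dest / tops.pop()
    return dest


# Example usage (hypothetical paths):
# spark_home = extract_spark(r"C:\Users\you\Downloads\spark-<version>-bin-hadoop3.tgz", r"C:\spark")
# print(spark_home / "bin")
```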

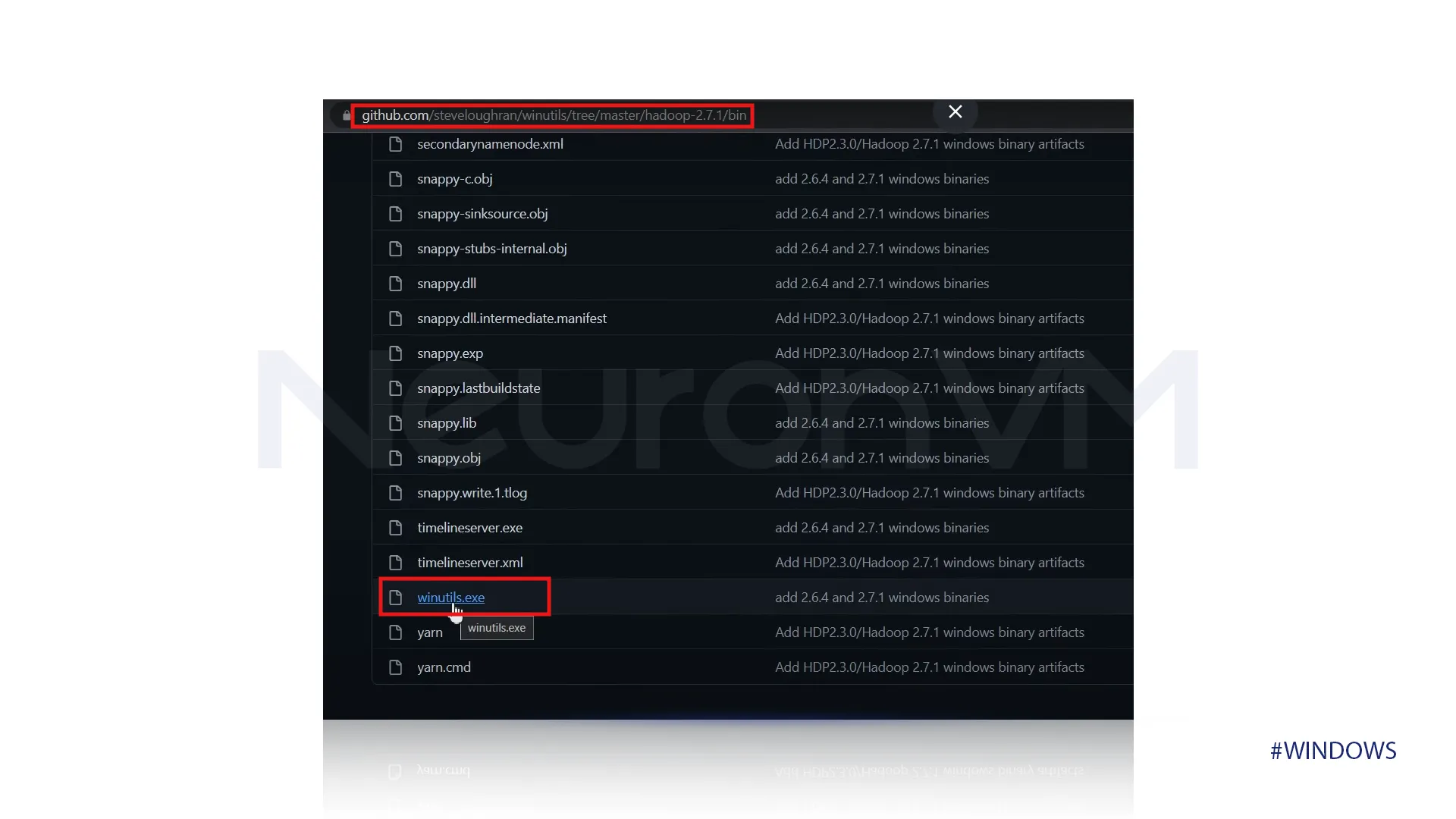

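Step 3) Before you can start the Spark shell, you need WinUtils, a small set of Windows binaries that Hadoop relies on. Download it from the GitHub link provided here, and choose a build for the same Hadoop major version as your Spark package. In the repository, navigate to the ‘bin’ directory, where you will find the file named ‘winutils.exe’. Click on it, then download the file. Finally, place winutils.exe in a bin folder inside a Hadoop directory of your choice, for example C:\hadoop\bin, because Hadoop looks for it in %HADOOP_HOME%\bin.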
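Copying the file into place can also be scripted. The Python sketch below is illustrative only. The helper `install_winutils` and the example paths are hypothetical. It assumes the Hadoop variable (conventionally HADOOP_HOME) will point to the folder you pass as `hadoop_home`, not to its bin subfolder.

```python
import shutil
from pathlib import Path


def install_winutils(downloaded_exe, hadoop_home):
    """Copy winutils.exe into <hadoop_home>\\bin, where Hadoop looks for it.

    install_winutils is a hypothetical helper for illustration.
    """
    bin_dir = Path(hadoop_home) / "bin"
    bin_dir.mkdir(parents=True, exist_ok=True)
    target = bin_dir / "winutils.exe"
    # copy2 also preserves the file's timestamps.
    shutil.copy2(downloaded_exe, target)
    return target


# Example usage (hypothetical paths):
# install_winutils(r"C:\Users\you\Downloads\winutils.exe", r"C:\hadoop")
# The file ends up at C:\hadoop\bin\winutils.exe, and HADOOP_HOME should be C:\hadoop.
```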

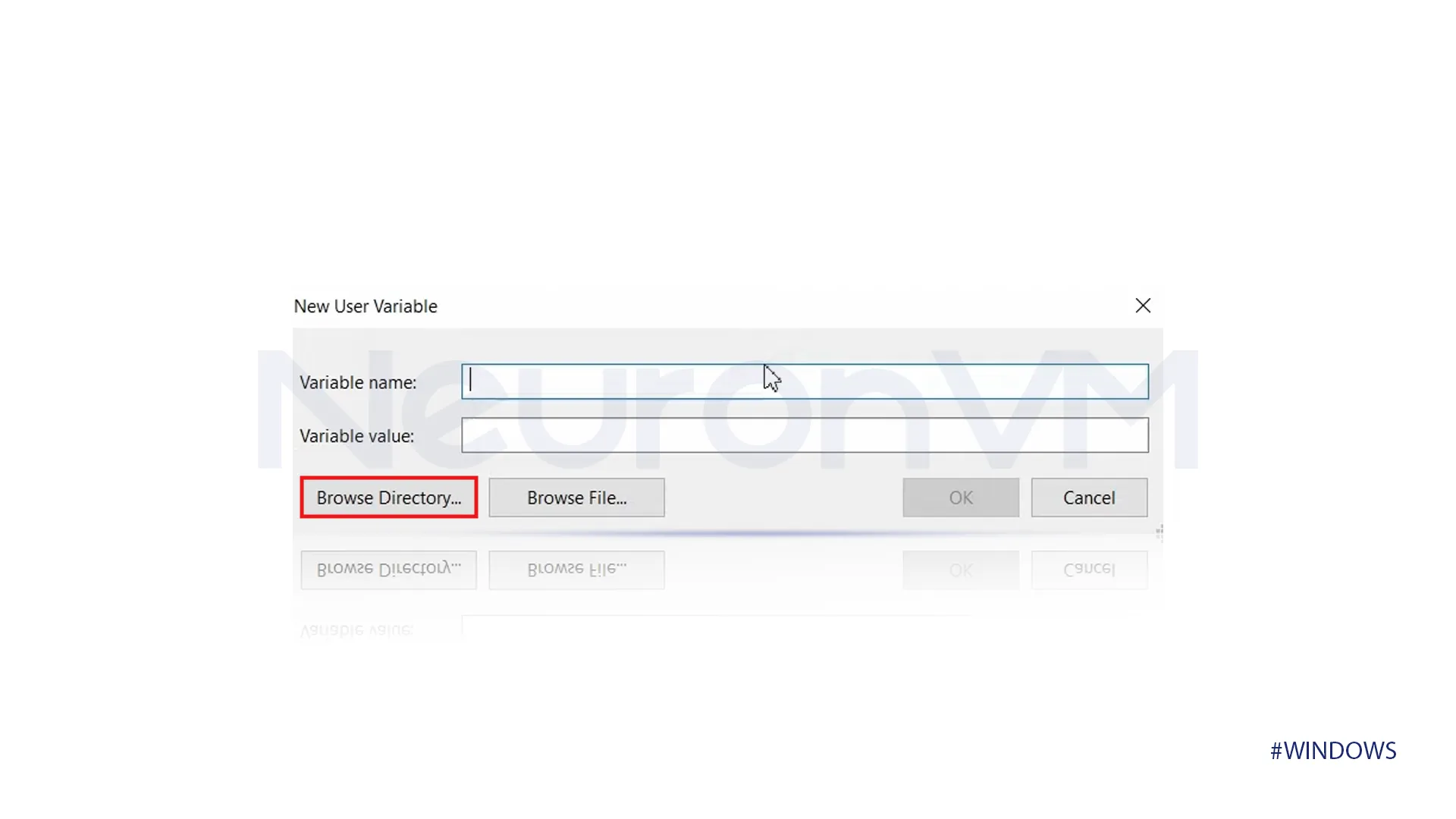

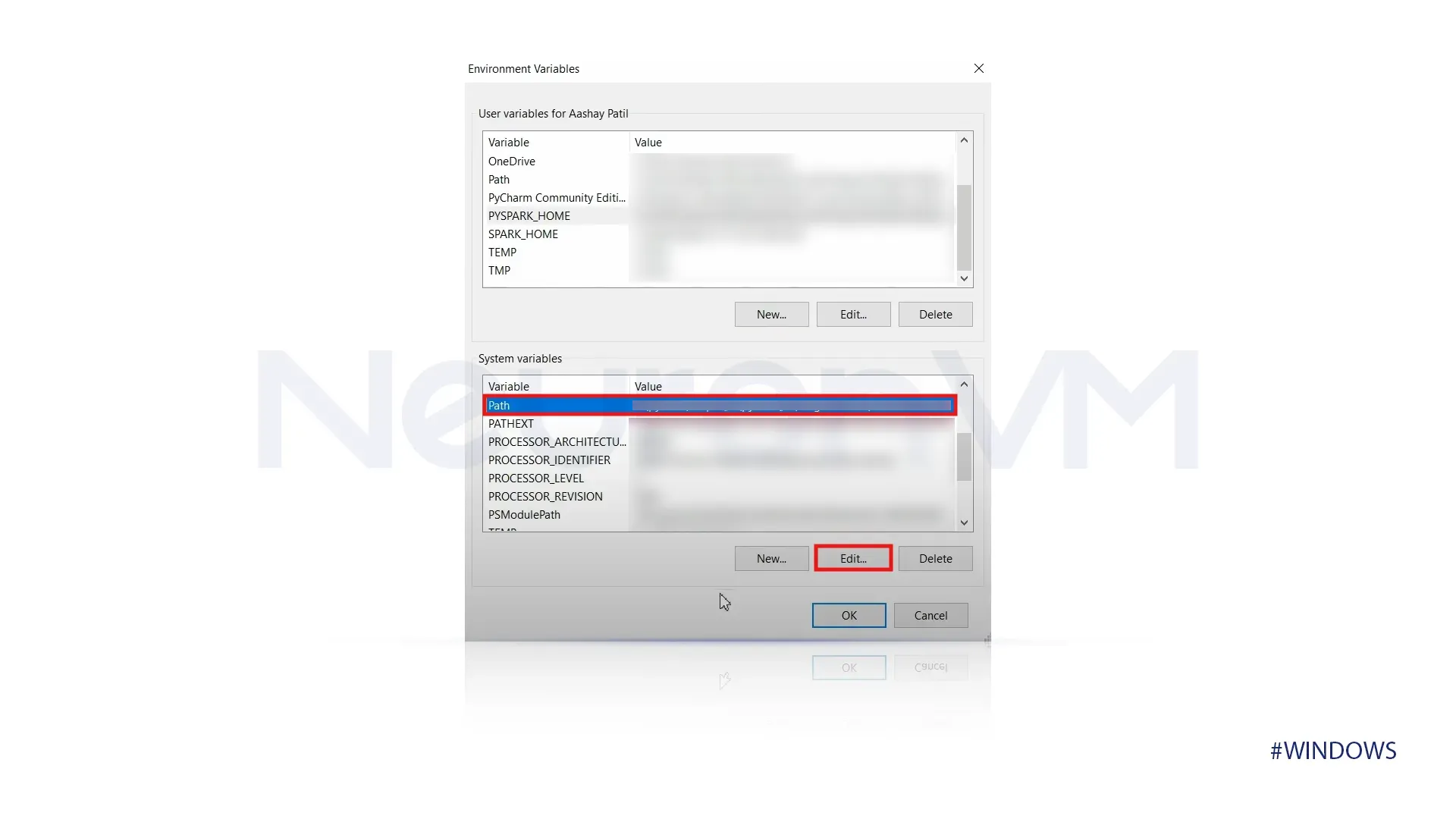

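Step 4) Next, create the environment variables. Open the Environment Variables settings (search the Start menu for “Edit the system environment variables,” then click “Environment Variables”). Add variables for Hadoop, Java, Spark, and Python one by one, for example HADOOP_HOME, JAVA_HOME, SPARK_HOME, and PYSPARK_PYTHON. Use the browse buttons to carefully select the correct location for each one:

- HADOOP_HOME: the Hadoop folder that contains bin\winutils.exe
- JAVA_HOME: the JDK installation folder
- SPARK_HOME: the extracted Spark folder
- PYSPARK_PYTHON: your python.exe file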

Step 5) Now update the Path variable. Under System variables, select “Path” and click “Edit.” Add the bin directory of Java, Spark, and Hadoop, for example %JAVA_HOME%\bin, %SPARK_HOME%\bin, and %HADOOP_HOME%\bin. Make sure the JAVA_HOME variable itself points to the JDK installation folder, not to its bin directory, because Spark’s Windows launch scripts look for java in %JAVA_HOME%\bin. The same rule applies to SPARK_HOME and HADOOP_HOME.

Note: For each entry, click “New,” then type or paste the directory path.
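Before opening a new CMD window, you can sanity-check the variables with a short Python script. This is a minimal sketch that uses only the standard library. It assumes the conventional names JAVA_HOME, SPARK_HOME, and HADOOP_HOME, and `check_spark_env` is a hypothetical helper. It flags the mistakes described in Steps 3 to 5: a variable that is unset, a variable pointing at a bin directory, a bin directory missing from Path, and a missing winutils.exe.

```python
import os
from pathlib import Path

# Assumption: the conventional variable names used by Java, Spark, and Hadoop.
HOME_VARS = ("JAVA_HOME", "SPARK_HOME", "HADOOP_HOME")


def _norm(path):
    """Normalize a path for comparison (case-insensitive on Windows)."""
    return os.path.normcase(os.path.normpath(path))


def check_spark_env(env=None):
    """Return a list of problems with the Spark-related variables; empty means OK.

    check_spark_env is a hypothetical helper, not part of Spark or Windows.
    """
    env = os.environ if env is None else env
    # Normalized Path entries, so a trailing backslash or different case still matches.
    path_entries = {_norm(p) for p in env.get("PATH", "").split(os.pathsep) if p}
    problems = []
    for var in HOME_VARS:
        home = env.get(var)
        if not home:
            problems.append(f"{var} is not set")
            continue
        if Path(home).name.lower() == "bin":
            problems.append(f"{var} points to a bin directory; use its parent folder")
            continue
        bin_dir = Path(home) / "bin"
        if not bin_dir.is_dir():
            problems.append(f"{bin_dir} does not exist")
        if _norm(str(bin_dir)) not in path_entries:
            problems.append(f"{bin_dir} is not on Path")
    hadoop_home = env.get("HADOOP_HOME")
    if hadoop_home and not (Path(hadoop_home) / "bin" / "winutils.exe").is_file():
        problems.append("winutils.exe not found in %HADOOP_HOME%\\bin")
    return problems


if __name__ == "__main__":
    for problem in check_spark_env() or ["Environment looks OK"]:
        print(problem)
```

Passing a plain dictionary instead of the real environment makes the checks easy to try without touching your system settings.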

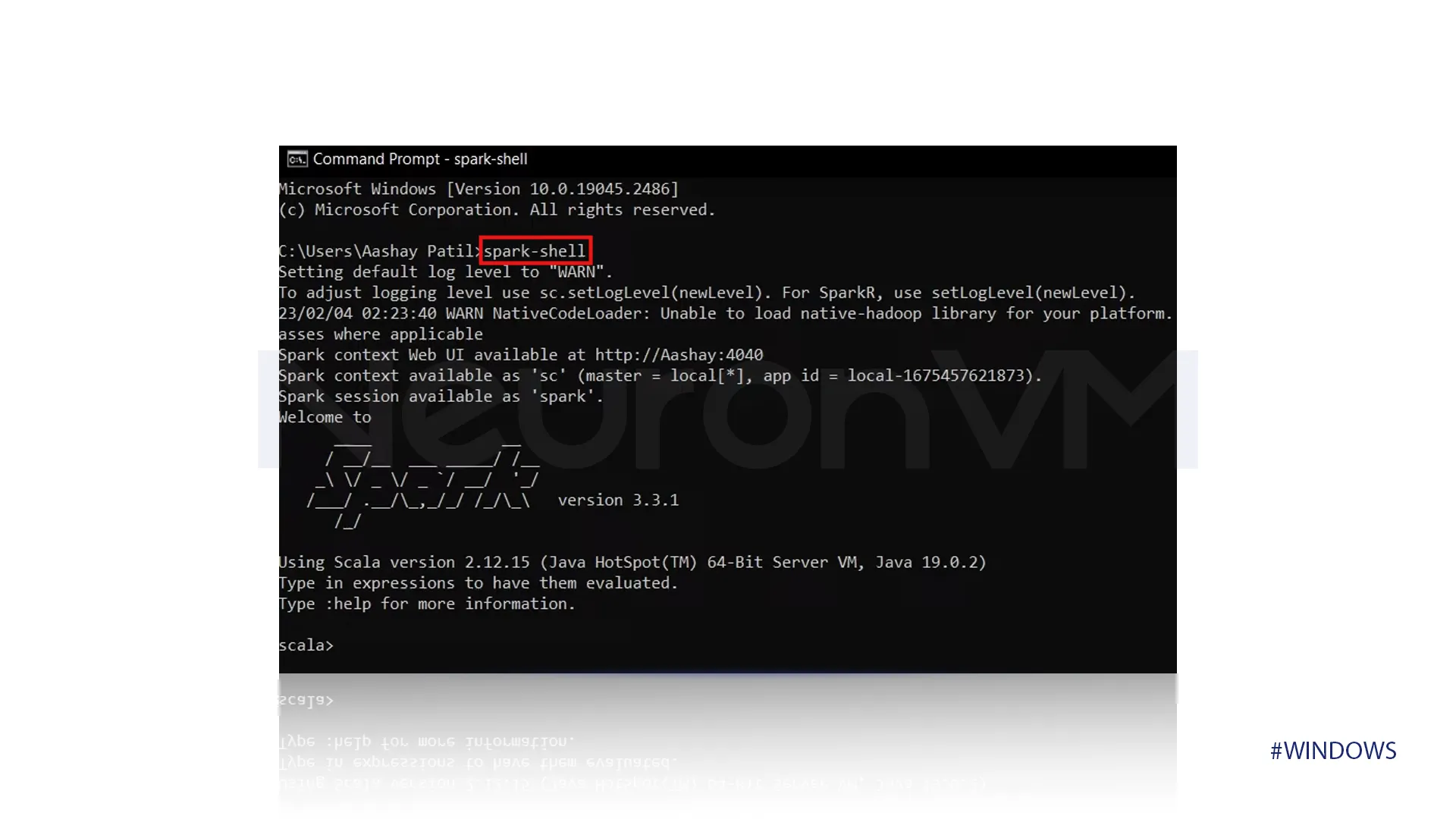

Step 6) To verify that Spark was installed successfully, open a new CMD window (so it picks up the updated environment variables) and run the command below. If the startup banner shows the Spark version you installed and a scala> prompt appears, the installation was successful. Type :quit to exit the shell.

spark-shell
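Because spark-shell opens an interactive prompt, its output is awkward to read from a script. As a non-interactive alternative, spark-submit --version prints the same version banner and exits. The Python sketch below runs that command and extracts the version number. It is a hypothetical helper, not part of Spark, and it assumes Spark’s bin directory is already on Path. The regular expression skips the "Scala version" line, because the banner also reports the Scala version.

```python
import re
import shutil
import subprocess

# Matches "version X.Y.Z" in the Spark banner, but not "Scala version X.Y.Z".
_VERSION_RE = re.compile(r"(?<!Scala )version\s+(\d+\.\d+\.\d+)")


def parse_spark_version(banner):
    """Extract the Spark version (e.g. "3.5.1") from banner text, or return None."""
    match = _VERSION_RE.search(banner)
    return match.group(1) if match else None


def installed_spark_version():
    """Run `spark-submit --version` and parse its banner.

    Returns None if spark-submit is not on Path or no version is printed.
    """
    exe = shutil.which("spark-submit")
    if exe is None:
        return None
    result = subprocess.run([exe, "--version"], capture_output=True, text=True)
    # The banner is usually written to stderr, so search both streams.
    return parse_spark_version(result.stdout + result.stderr)


if __name__ == "__main__":
    print(installed_spark_version() or "spark-submit not found or version not detected")
```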

Conclusion

This article explained the idea of a core layer, introduced Apache Spark and its uses, listed the requirements for installing Spark, and provided a complete step-by-step guide to installing it on Windows 10.

Did you know that one reason for Apache Spark’s speed is its ability to cache data in memory and reuse it across operations?

If you’re interested in more Windows tutorials, visit our website, neuronVM.
